Striking the Right Balance: Systematic Assessment of Evaluation Method Distribution Across Contribution Types

Feng Lin - University of North Carolina at Chapel Hill, Chapel Hill, United States

Arran Zeyu Wang - University of North Carolina-Chapel Hill, Chapel Hill, United States

Md Dilshadur Rahman - University of Utah, Salt Lake City, United States

Danielle Albers Szafir - University of North Carolina-Chapel Hill, Chapel Hill, United States

Ghulam Jilani Quadri - University of Oklahoma, Norman, United States


Room: Bayshore I


2024-10-14T16:00:00Z


Abstract

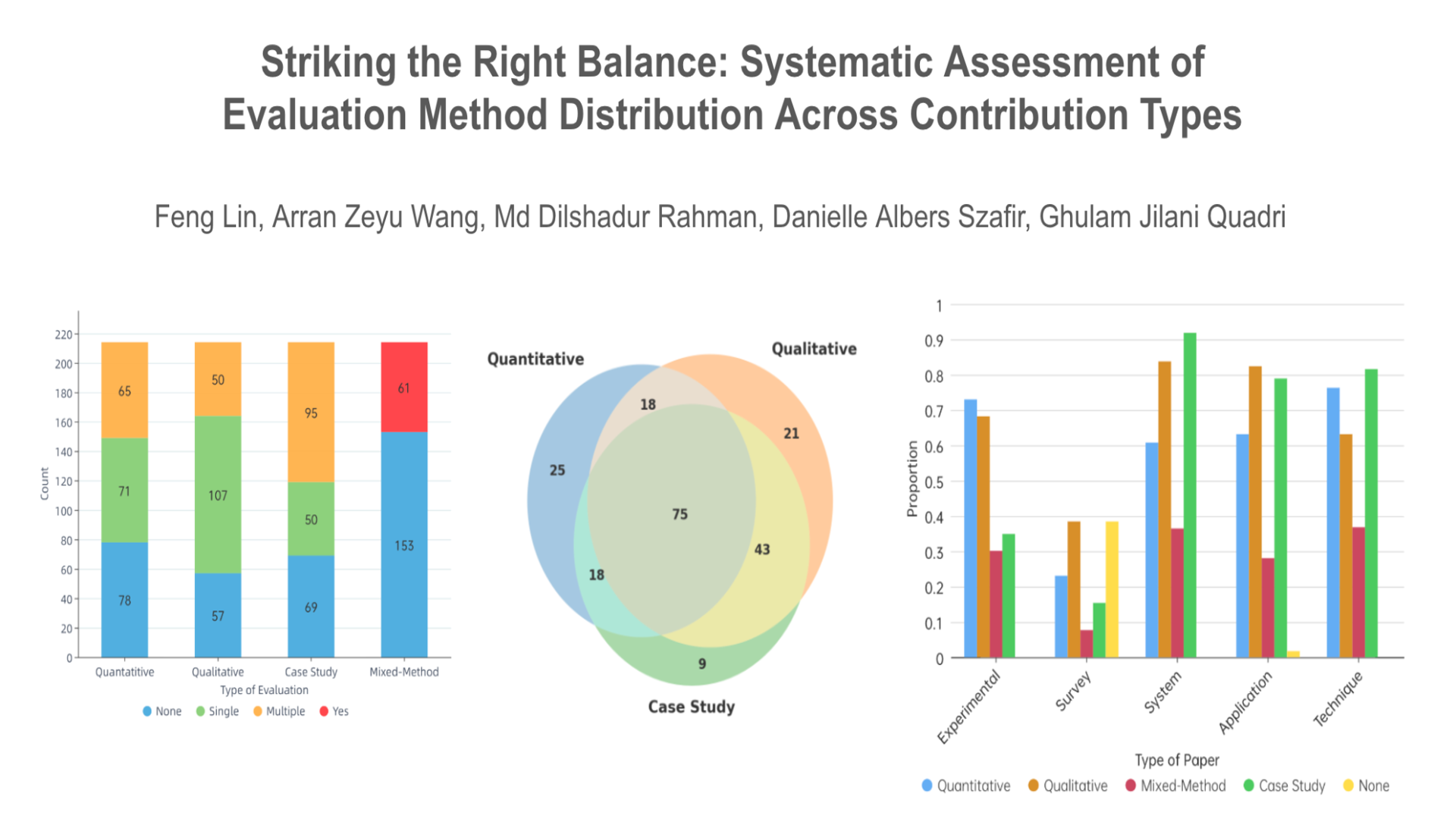

In the rapidly evolving field of information visualization, rigorous evaluation is essential for validating new techniques, understanding user interactions, and demonstrating the effectiveness of visualizations. Evaluating visualization systems is fundamental to ensuring their effectiveness, usability, and impact. Faithful evaluations provide valuable insights into how users interact with and perceive a system, enabling designers to make informed decisions about design choices and improvements. However, an emerging trend of including multiple evaluations within a single study raises critical questions about the sustainability, feasibility, and methodological rigor of such an approach. How many evaluations are enough? The answer is situational and cannot be determined by formula. Our objective is to summarize current trends and patterns to understand general practices across different contribution and evaluation types. New researchers and students influenced by this trend may come to believe that multiple evaluations are necessary for a study. However, the number of evaluations in a study should depend on its contributions and merits, not on a trend of adding evaluations to strengthen a paper. In this position paper, we identify this trend through a non-exhaustive literature survey of TVCG papers from issue 1 of 2023 and 2024. We then discuss patterns of evaluation strategy in the information visualization field and how this paper can open avenues for further discussion.