VoxAR: Adaptive Visualization of Volume Rendered Objects in Optical See-Through Augmented Reality

Saeed Boorboor

Matthew S. Castellana

Yoonsang Kim

Zhutian Chen

Johanna Beyer

Hanspeter Pfister

Arie E. Kaufman

DOI: 10.1109/TVCG.2023.3340770

Room: Bayshore II

2024-10-16T12:54:00Z

Keywords

Adaptive Visualization, Situated Visualization, Augmented Reality, Volume Rendering

Abstract

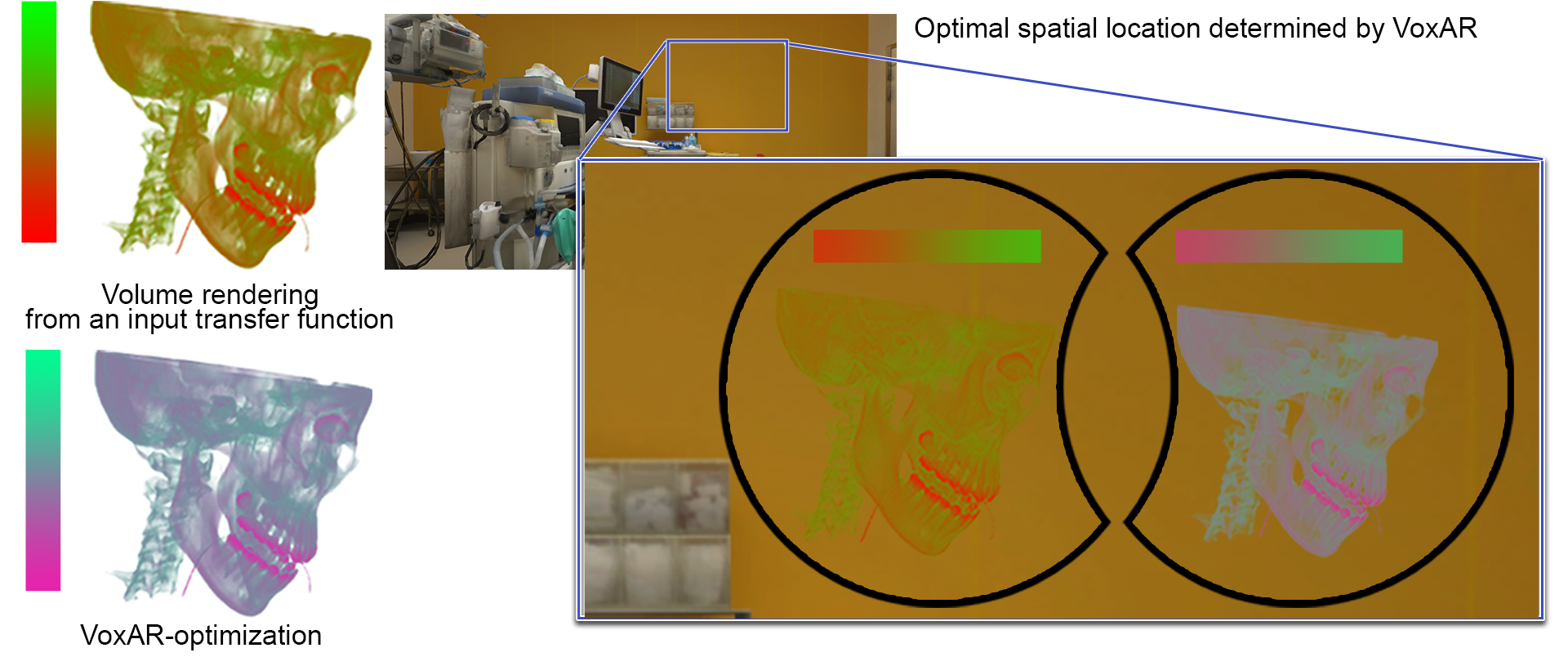

We present VoxAR, a method that facilitates effective visualization of volume-rendered objects in optical see-through head-mounted displays (OST-HMDs). The potential of augmented reality (AR) to integrate digital information into the physical world provides new opportunities for visualizing and interpreting scientific data. However, a limitation of OST-HMD technology is that the rendered pixels of a virtual object can interfere with the colors of the real world, making it challenging to perceive the augmented virtual information accurately. We address this challenge with a two-step approach. First, VoxAR determines an appropriate placement of the volume-rendered object in the real-world scene by evaluating a set of spatial and environmental objectives, managed as user-selected preferences and pre-defined constraints. We achieve a real-time solution by implementing the objectives in a GPU shader language. Next, VoxAR adjusts the colors of the input transfer function (TF) based on the real-world placement region. Specifically, we introduce a novel optimization method that adjusts the TF colors such that the resulting volume-rendered pixels are discernible against the background and the TF maintains the perceptual mapping between the colors and data intensity values. Finally, we present an assessment of our approach through objective evaluations and subjective user studies.
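The abstract does not say how the placement objectives are combined. As a minimal sketch, assuming each objective has already been evaluated for every candidate region and normalized to [0, 1], user-selected preferences can be modeled as weights in a weighted mean and pre-defined constraints as hard filters. Everything named below (`Region`, `score`, `best_region`, and the objectives `background_darkness`, `uniformity`, `proximity`) is hypothetical, and the sketch runs on the CPU in Python rather than in a GPU shader. A dark-background objective is a plausible example because an additive OST display cannot darken the real world, so bright backgrounds tend to wash out rendered colors.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Region:
    """Hypothetical candidate placement region in the real-world scene."""
    name: str
    # Objective name -> score already normalized to [0, 1]; higher is better.
    objectives: Dict[str, float] = field(default_factory=dict)
    # Constraint name -> whether this region satisfies it (hard requirement).
    constraints: Dict[str, bool] = field(default_factory=dict)


def score(region: Region, weights: Dict[str, float]) -> float:
    """Weighted mean of objective scores; the weights stand in for user preferences."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one preference weight must be positive")
    return sum(w * region.objectives.get(name, 0.0) for name, w in weights.items()) / total


def best_region(regions: List[Region], weights: Dict[str, float]) -> Optional[Region]:
    """Drop regions that violate any constraint, then return the highest-scoring one (or None)."""
    feasible = [r for r in regions if all(r.constraints.values())]
    if not feasible:
        return None
    return max(feasible, key=lambda r: score(r, weights))


if __name__ == "__main__":
    regions = [
        Region("table", {"background_darkness": 0.8, "uniformity": 0.9, "proximity": 0.4},
               {"in_view": True, "unoccluded": True}),
        Region("window", {"background_darkness": 0.1, "uniformity": 0.7, "proximity": 0.9},
               {"in_view": True, "unoccluded": True}),
        Region("desk", {"background_darkness": 0.9, "uniformity": 0.95, "proximity": 0.95},
               {"in_view": True, "unoccluded": False}),
    ]
    # The user weights a dark background twice as heavily as the other objectives.
    weights = {"background_darkness": 2.0, "uniformity": 1.0, "proximity": 1.0}
    print(best_region(regions, weights).name)  # table
```

Here "desk" scores highest on every objective but fails a hard constraint, so "table" (weighted score 0.725) wins over "window" (0.45). Keeping constraints separate from weights means no preference setting can select an infeasible region.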
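The color-adjustment step can be illustrated with a deliberately simple stand-in; it is not VoxAR's optimization method. It assumes a simplified additive OST model in which the perceived color is the rendered color plus the background color, clipped per channel to 1, so a rendered color only shows up in channels where the background leaves headroom. The hypothetical `adjust_tf_colors` blends every TF control-point color toward that headroom color by one factor t and returns the smallest t on a grid for which every control point lies at least `min_distance` from the background (Euclidean distance in linear RGB). Because the blend is affine with weight 1 - t > 0, the luminance order of the rendered control-point colors, and hence their ordering along the intensity axis, is preserved for t < 1.

```python
import math
from typing import List, Optional, Tuple

Color = Tuple[float, float, float]  # linear RGB, each channel in [0, 1]


def perceived(rendered: Color, background: Color) -> Color:
    """Simplified additive OST model: display light adds to background light, clipped at 1."""
    return tuple(min(1.0, r + b) for r, b in zip(rendered, background))


def discernibility(rendered: Color, background: Color) -> float:
    """Euclidean distance (linear RGB) between the perceived color and the bare background."""
    return math.dist(perceived(rendered, background), background)


def luminance(c: Color) -> float:
    """Relative luminance of a linear-RGB color (Rec. 709 / sRGB primaries)."""
    r, g, b = c
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def luminance_is_monotone(colors: List[Color]) -> bool:
    """True if luminance never decreases from low- to high-intensity control points."""
    lum = [luminance(c) for c in colors]
    return all(a <= b for a, b in zip(lum, lum[1:]))


def adjust_tf_colors(colors: List[Color], background: Color, min_distance: float,
                     steps: int = 20) -> Optional[Tuple[float, List[Color]]]:
    """Blend TF colors toward the background's headroom by the smallest grid t in [0, 1]
    that puts every control point at least min_distance from the background.
    Returns (t, adjusted_colors), or None if even t = 1 is not enough."""
    headroom = tuple(1.0 - b for b in background)  # light the display can still add per channel
    for i in range(steps + 1):
        t = i / steps
        # Affine blend with weight (1 - t) > 0 keeps the luminance order for t < 1.
        adjusted = [tuple((1.0 - t) * c + t * h for c, h in zip(color, headroom))
                    for color in colors]
        # Discernibility never decreases as t grows, so the first passing t is the smallest.
        if all(discernibility(c, background) >= min_distance for c in adjusted):
            return t, adjusted
    return None


if __name__ == "__main__":
    background = (0.9, 0.9, 0.2)  # bright, yellowish real-world region
    tf_colors = [(0.2, 0.0, 0.0), (0.5, 0.2, 0.0), (0.9, 0.6, 0.1)]  # low -> high intensity
    t, adjusted = adjust_tf_colors(tf_colors, background, min_distance=0.3)
    print(t, luminance_is_monotone(adjusted))  # 0.4 True
```

Against this bright yellowish background, blue is the only channel with substantial headroom, so the scan shifts the TF toward blue and stops at t = 0.4. A perceptual color space such as CIELAB and an optimizer over individual control points would be more faithful choices; linear RGB and a one-parameter scan keep the sketch short.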