Submerse: Visualizing Storm Surge Flooding Simulations in Immersive Display Ecologies

Saeed Boorboor

Yoonsang Kim

Ping Hu

Josef Moses

Brian Colle

Arie E. Kaufman

DOI: 10.1109/TVCG.2023.3332511

Room: Bayshore VII

2024-10-16T14:15:00Z


Keywords

Camera navigation, flooding simulation visualization, immersive visualization, mixed reality

Abstract

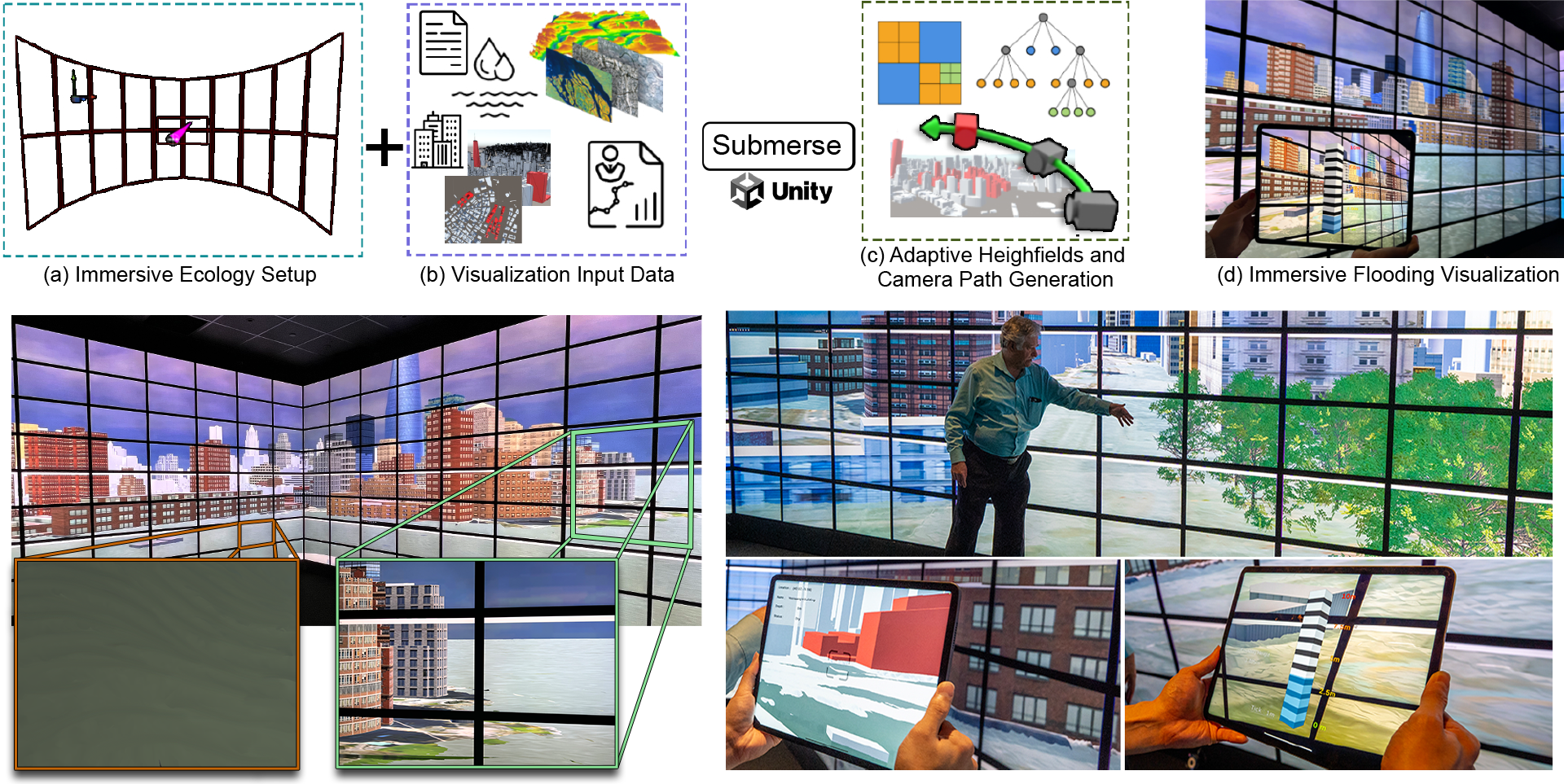

We present Submerse, an end-to-end framework for visualizing flooding scenarios on large and immersive display ecologies. Specifically, we reconstruct a surface mesh from input flood simulation data and generate a to-scale 3D virtual scene by incorporating geographical data such as terrain, textures, buildings, and additional scene objects. To optimize computation and memory performance for large simulation datasets, we discretize the data on an adaptive grid using dynamic quadtrees and support level-of-detail-based rendering. Moreover, to convey the direction of flooding at a given time instance, we animate the surface mesh by synthesizing water waves. As interaction is key for effective decision-making and analysis, we introduce two novel techniques for flood visualization in immersive systems: (1) an automatic scene-navigation method using optimal camera viewpoints generated for marked points-of-interest based on the display layout, and (2) an AR-based focus+context technique using an auxiliary display system. Submerse is developed in collaboration between computer scientists and atmospheric scientists. We evaluate the effectiveness of our system and application by conducting workshops with emergency managers, domain experts, and concerned stakeholders in the Stony Brook Reality Deck, an immersive gigapixel facility, to visualize a superstorm flooding scenario in New York City.
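To illustrate the adaptive-grid idea mentioned in the abstract, the following is a minimal sketch of quadtree discretization over a gridded water-depth field. It is not the authors' implementation: the names `QuadNode`, `build_quadtree`, `leaves`, and the `tolerance` parameter are hypothetical, the split criterion (depth range within a block) is an assumed stand-in, and the tree is built once rather than updated dynamically. The sketch also assumes a square grid whose side length is a power of two.

```python
from dataclasses import dataclass, field
from typing import List, Sequence


@dataclass
class QuadNode:
    x: int             # column of the block's top-left sample
    y: int             # row of the block's top-left sample
    size: int          # side length of the block, in grid samples
    depth_mean: float  # mean water depth over the block
    children: List["QuadNode"] = field(default_factory=list)


def build_quadtree(grid: Sequence[Sequence[float]], x: int, y: int,
                   size: int, tolerance: float) -> QuadNode:
    """Split a square block until its depth range is within `tolerance`."""
    block = [grid[r][c] for r in range(y, y + size) for c in range(x, x + size)]
    node = QuadNode(x, y, size, sum(block) / len(block))
    # Assumed criterion: refine only where the depth varies noticeably.
    if size > 1 and max(block) - min(block) > tolerance:
        half = size // 2
        for dy in (0, half):
            for dx in (0, half):
                node.children.append(
                    build_quadtree(grid, x + dx, y + dy, half, tolerance))
    return node


def leaves(node: QuadNode) -> List[QuadNode]:
    """Collect the leaf cells, i.e. the adaptive grid itself."""
    if not node.children:
        return [node]
    out: List[QuadNode] = []
    for child in node.children:
        out.extend(leaves(child))
    return out


# Toy 4x4 depth field: dry everywhere except one varying quadrant.
grid = [
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 1, 2],
    [0, 0, 3, 4],
]
root = build_quadtree(grid, 0, 0, 4, tolerance=0.5)
cells = leaves(root)
print(len(cells))  # 7 cells instead of 16 uniform samples
```

In this toy case the three uniform quadrants each collapse into a single cell, while the varying quadrant is refined down to individual samples. A level-of-detail renderer could, under the same assumptions, stop the traversal at a coarser depth for regions far from the viewer.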