A Deixis-Centered Approach for Documenting Remote Synchronous Communication around Data Visualizations

Chang Han - University of Utah, Salt Lake City, United States

Katherine E. Isaacs - The University of Utah, Salt Lake City, United States

Room: Bayshore V

2024-10-16T16:36:00Z

Keywords

Taxonomy, Models, Frameworks, Theory ; Collaboration ; Communication/Presentation, Storytelling

Abstract

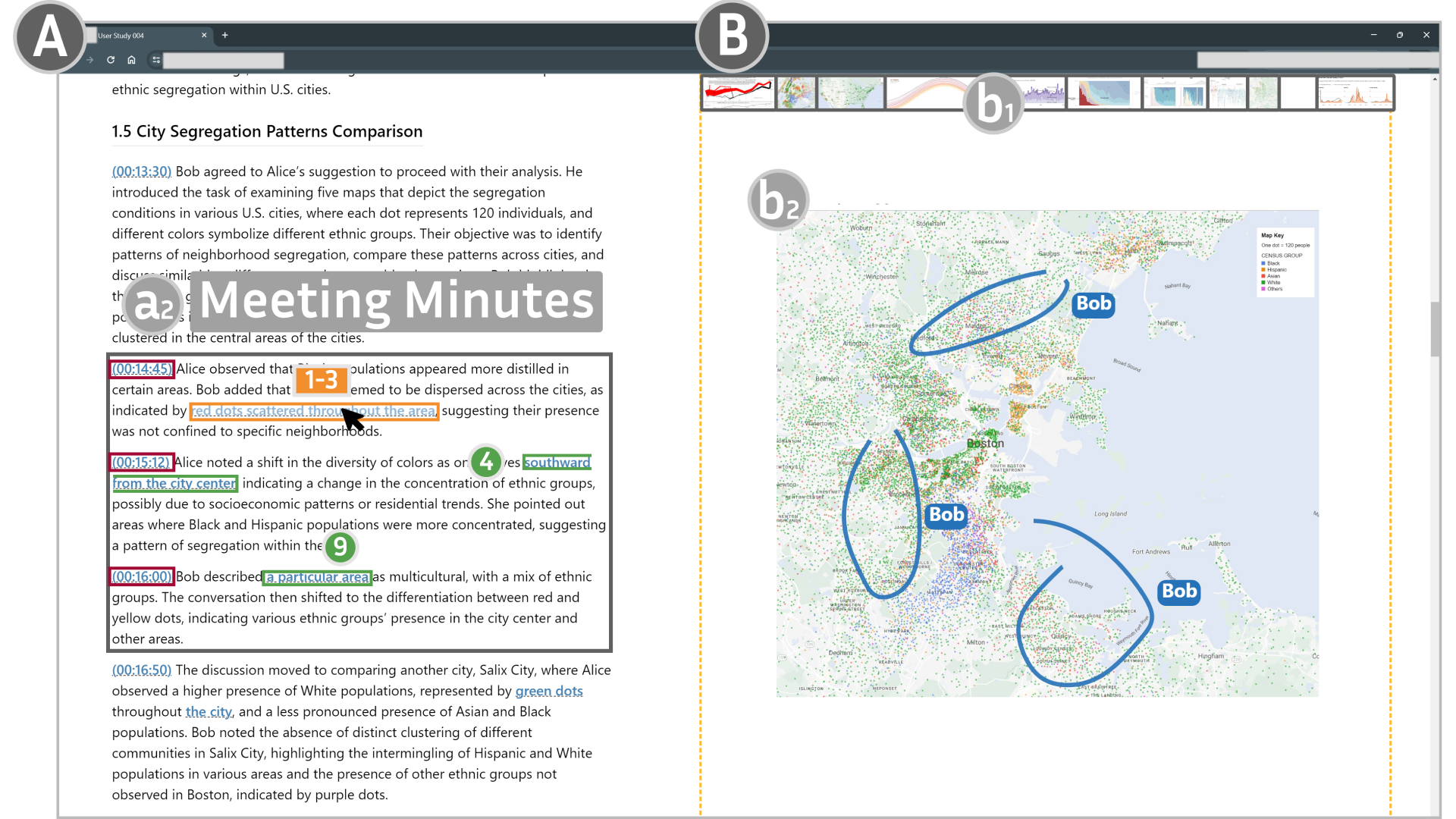

Referential gestures, or as termed in linguistics, deixis, are an essential part of communication around data visualizations. Despite their importance, such gestures are often overlooked when documenting data analysis meetings. Transcripts, for instance, fail to capture gestures, and video recordings may not adequately capture or emphasize them. We introduce a novel method for documenting collaborative data meetings that treats deixis as a first-class citizen. Our proposed framework captures cursor-based gestural data along with audio and converts them into interactive documents. The framework leverages a large language model to identify word correspondences with gestures. These identified references are used to create context-based annotations in the resulting interactive document. We assess the effectiveness of our proposed method through a user study, finding that participants preferred our automated interactive documentation over recordings, transcripts, and manual note-taking. Furthermore, we derive a preliminary taxonomy of cursor-based deictic gestures from participant actions during the study. This taxonomy offers further opportunities for better utilizing cursor-based deixis in collaborative data analysis scenarios.