StyleRF-VolVis: Style Transfer of Neural Radiance Fields for Expressive Volume Visualization

Kaiyuan Tang - University of Notre Dame, Notre Dame, United States

Chaoli Wang - University of Notre Dame, Notre Dame, United States


Room: Bayshore I

2024-10-16T12:54:00Z




Keywords

Style transfer, neural radiance field, knowledge distillation, volume visualization

Abstract

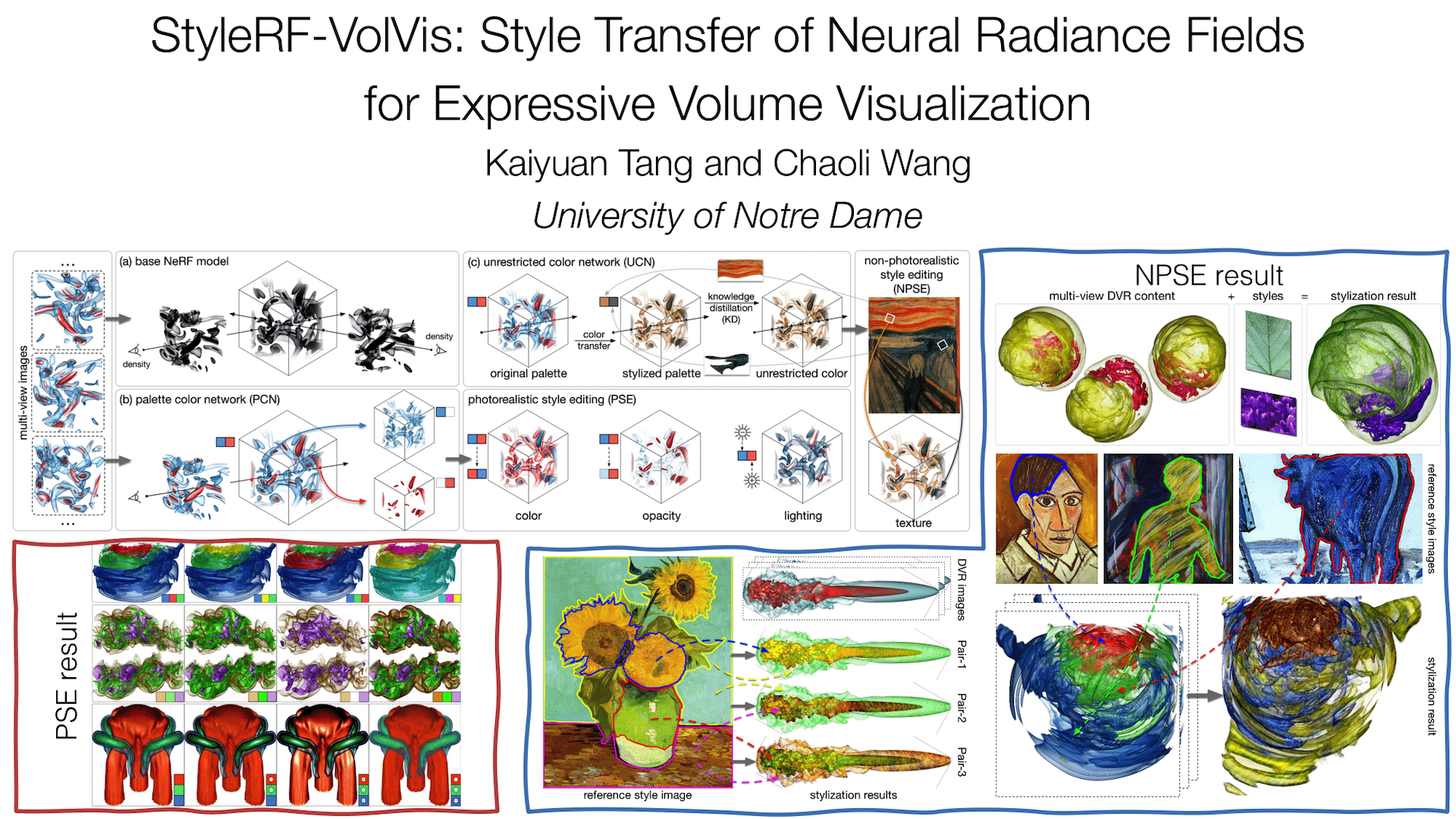

In volume visualization, visualization synthesis has attracted much attention due to its ability to generate novel visualizations without following the conventional rendering pipeline. However, existing solutions based on generative adversarial networks often require many training images and take significant training time, and they still suffer from limited quality, consistency, and flexibility. This paper introduces StyleRF-VolVis, an innovative style transfer framework for expressive volume visualization (VolVis) via neural radiance field (NeRF). The expressiveness of StyleRF-VolVis rests on three abilities: accurately separating the underlying scene geometry (i.e., content) from the color appearance (i.e., style); conveniently modifying the color, opacity, and lighting of the original rendering while maintaining visual content consistency across views; and effectively transferring arbitrary styles from reference images to the reconstructed 3D scene. To achieve these goals, we design a base NeRF model for scene geometry extraction, a palette color network that classifies regions of the radiance field for photorealistic editing, and an unrestricted color network that lifts the color palette constraint via knowledge distillation for non-photorealistic editing. We demonstrate the superior quality, consistency, and flexibility of StyleRF-VolVis by experimenting with various volume rendering scenes and reference images and by comparing it against other image-based (AdaIN), video-based (ReReVST), and NeRF-based (ARF and SNeRF) style rendering solutions.
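The abstract separates geometry (density) from appearance (color), uses a palette network for photorealistic recoloring, and distills it into an unrestricted color network. The sketch below illustrates that idea under stated assumptions and is not the authors' implementation. It assumes three things: each sample's color is a softmax-weighted blend of K palette colors, rays are rendered with standard NeRF alpha compositing, and distillation is a plain mean-squared-error loss between student and teacher colors. The function names `palette_color`, `composite`, and `distillation_loss` are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def palette_color(logits, palette):
    """Hypothetical palette head: blend K palette RGB colors with softmax weights."""
    weights = softmax(logits)
    return tuple(sum(w * c[ch] for w, c in zip(weights, palette)) for ch in range(3))


def composite(sigmas, colors, deltas):
    """Standard NeRF quadrature: C = sum_i T_i * (1 - exp(-sigma_i * delta_i)) * c_i."""
    rgb = [0.0, 0.0, 0.0]
    transmittance = 1.0  # T_i: fraction of light not yet absorbed along the ray
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)
        weight = transmittance * alpha
        for ch in range(3):
            rgb[ch] += weight * color[ch]
        transmittance *= 1.0 - alpha
    return tuple(rgb)


def distillation_loss(student_rgb, teacher_rgb):
    """Assumed MSE loss pulling the unrestricted (student) color toward the palette (teacher) color."""
    return sum((s - t) ** 2 for s, t in zip(student_rgb, teacher_rgb)) / len(teacher_rgb)


# Usage: one ray with two samples and a two-color palette (all values illustrative).
palette = [(1.0, 0.0, 0.0), (0.0, 0.0, 1.0)]  # K = 2 base colors: red, blue
logits = [[2.0, -2.0], [-2.0, 2.0]]           # per-sample palette logits
sigmas, deltas = [0.5, 5.0], [1.0, 1.0]       # densities (geometry) and step sizes
teacher = composite(sigmas, [palette_color(l, palette) for l in logits], deltas)

recolored = [(0.0, 1.0, 0.0), palette[1]]     # photorealistic edit: red -> green
edited = composite(sigmas, [palette_color(l, recolored) for l in logits], deltas)
```

In this sketch, `sigmas` is left unchanged by the palette edit, so the recolored render keeps the same geometry and only the appearance tied to the edited palette entry shifts. An unrestricted student network would first be fit to the teacher's colors with `distillation_loss`. It could then be optimized freely, without the palette constraint, for non-photorealistic style transfer.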