Standardized Data-Parallel Rendering Using ANARI

Ingo Wald - NVIDIA, Salt Lake City, United States

Stefan Zellmann - University of Cologne, Cologne, Germany

Jefferson Amstutz - NVIDIA, Austin, United States

Qi Wu - University of California, Davis, Davis, United States

Kevin Shawn Griffin - NVIDIA, Santa Clara, United States

Milan Jaroš - VSB - Technical University of Ostrava, Ostrava, Czech Republic

Stefan Wesner - University of Cologne, Cologne, Germany

Room: Bayshore II

2024-10-13T16:00:00Z

Abstract

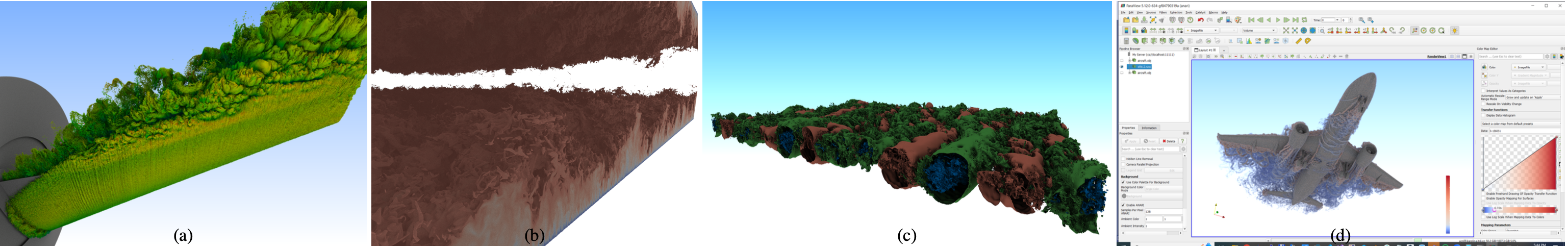

We propose and discuss a paradigm that allows for expressing data-parallel rendering with the classically non-parallel ANARI API. We propose this as a new standard for data-parallel rendering, describe two different implementations of this paradigm, and use multiple sample integrations into existing applications to show how easy it is to adopt and what can be gained from doing so.